Can agents be machine learning engineers?

OpenAI evaluates AI agents as ML model builders, LLMs are the new CPUs, and more

Welcome back to Building AI Agents, your biweekly guide to everything new in the AI agent field!

Although I’m very bullish on AI agents’ potential, some claims about them seem a bit far-fetched. Come on, 2026 at least.

In today’s issue…

Testing agents’ ML engineering potential

Microsoft rolls out healthcare agents

How to build agents that pay humans

Are LLMs the new CPUs?

…and more

🔍 SPOTLIGHT

Source: arXiv

With the AI boom in full swing, machine learning engineering has been a bright spot in an otherwise dismal job market for software engineers. Now, OpenAI is coming to try to spoil the fun.

In a new paper titled MLE-bench: Evaluating Machine Learning Agents on Machine Learning Engineering, the company introduces a benchmark for assessing AI agents’ ability to build ML systems autonomously. MLE-bench consists of 75 curated tasks drawn from competitions run on the popular data science tournament site Kaggle, in which users compete to engineer and train ML models to predict the labels of given sets of data.

OpenAI’s researchers built a series of agents using the AIDE, ResearchAgent, and CodeActAgent scaffolds, powered them with the company’s new reasoning model o1-preview as well as the more traditional frontier LLMs GPT-4o, Llama 3.1 405B, and Claude 3.5 Sonnet, and gave them 24 hours to train machine learning models for each challenge. Not surprisingly, o1-preview performed by far the best, earning at least a bronze medal in 16.9% of cases, a figure that rose to 34.1% when the best of 8 attempts was counted. Performance also improved when agents were given more time: GPT-4o medaled in only 8.7% of cases with 24 hours, but in 11.8% with 100 hours.
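To make the difference between a single-attempt medal rate and a best-of-8 rate concrete, here is a minimal Python sketch. It assumes each competition's attempts are recorded as a list of booleans (medal or no medal); the function names and the toy data are hypothetical illustrations, not taken from the paper, and the paper's exact estimator may differ.

```python
from typing import Dict, List


def medal_rate_single(attempts: Dict[str, List[bool]]) -> float:
    """Mean per-attempt medal rate, averaged across competitions."""
    per_competition = [sum(runs) / len(runs) for runs in attempts.values()]
    return sum(per_competition) / len(per_competition)


def medal_rate_best_of_k(attempts: Dict[str, List[bool]], k: int) -> float:
    """Fraction of competitions where any of the first k attempts medaled."""
    hits = [any(runs[:k]) for runs in attempts.values()]
    return sum(hits) / len(hits)


# Hypothetical toy data: 4 competitions, 4 attempts each (True = medal).
toy_attempts = {
    "comp_a": [True, False, True, False],
    "comp_b": [False, False, False, False],
    "comp_c": [False, False, True, False],
    "comp_d": [False, False, False, False],
}

if __name__ == "__main__":
    print("single attempt:", medal_rate_single(toy_attempts))
    print("best of 4:", medal_rate_best_of_k(toy_attempts, k=4))
```

On this toy data the single-attempt rate is about 19% while the best-of-4 rate is 50%, which shows why allowing several attempts can roughly double a reported score even though no individual run got better.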

Although OpenAI’s results are promising, the agents often failed to create valid submissions, and even o1-preview scored above median human performance in only 29.4% of cases. A typical ML engineer would be expected to perform at least as well as the median participant, and likely considerably better, meaning that LLMs are not ready to step into the shoes of human professionals just yet. Additionally, Kaggle is widely considered in the ML community to be an incomplete proxy for real-world data science challenges, where model building is of secondary importance compared to data cleaning and pre-processing. Nevertheless, MLE-bench constitutes an important new yardstick for measuring AI agents’ performance on these tasks, and that performance seems sure to improve rapidly.

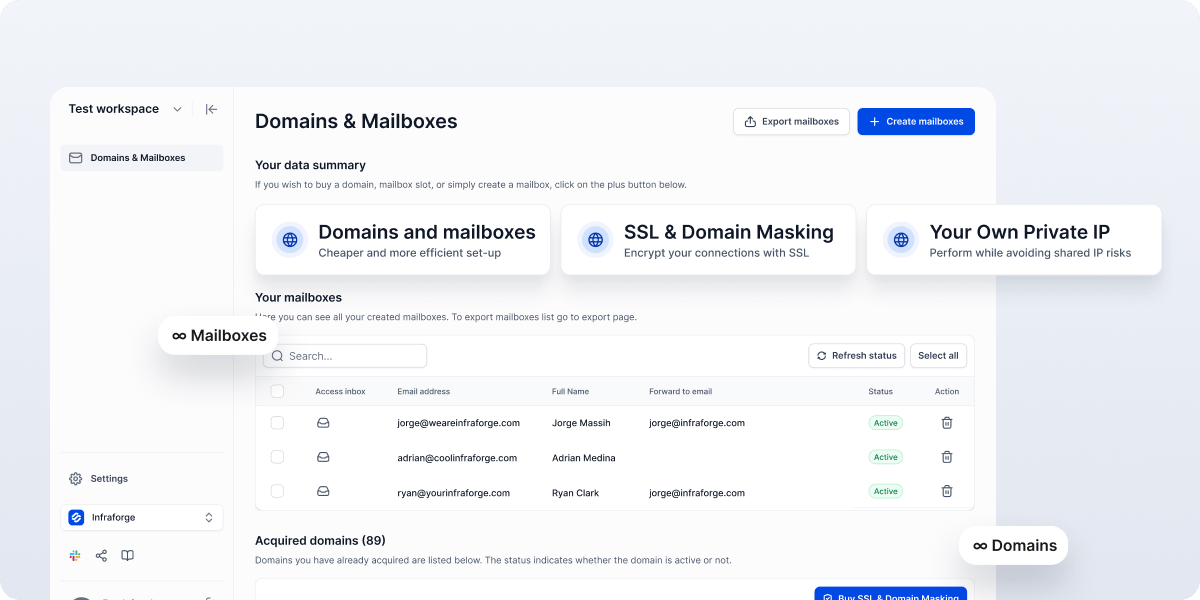

Presented by Salesforge

The first search engine for leads

Leadsforge is the very first search engine for leads. With a chat-like, easy interface, getting new leads is as easy as texting a friend! Just describe your ideal customer in the chat - industry, role, location, or other specific criteria - and our AI-powered search engine will instantly find and verify the best leads for you. No more guesswork, just results. Your lead lists will be ready in minutes!

📰 NEWS

Source: Microsoft

Microsoft has made a dedicated healthcare agent service available in Copilot Studio, allowing healthcare organizations to create AI agents that handle routine tasks for doctors and patients, and has released a new suite of multimodal LLMs dedicated to biomedical image analysis.

Leading agent framework provider CrewAI has launched its 0.70.1 update, introducing Flows, a method for creating asynchronous workflows that can coordinate multiple tasks, such as LLM calls and code execution.

The company’s researchers ignited a firestorm of debate last week with a new paper claiming to demonstrate that large language models are incapable of engaging in symbolic reasoning as humans do.

🛠️ USEFUL STUFF

Source: Payman

A compilation of example use cases for Payman—covered last week in Building AI Agents—which enables agents to engage in real-world financial transactions with humans.

On October 21-23, CrewAI will be conducting a live lecture and demo series on the use of its technology to automate business operations.

A new package that lets an agent carry out tasks on a user’s computer using web knowledge, memory, and planning, and supports evaluating it on computer-control benchmarks such as OSWorld and WindowsAgentArena.

An agent framework just released by OpenAI to allow users to build simple agents with the company’s models; it is mainly meant as an educational and prototyping tool and not for production use cases.

💡 ANALYSIS

Source: Flickr

Just as CPUs serve as the general-purpose core of today’s computers but require external memory and other specialized components to accomplish their tasks, this piece argues that LLMs are the core of future computing systems—powerful, but unable to function on their own without being supported by other modules.

This piece reviews the efforts that major tech companies are making in the AI agent domain and argues that the push is driven in part by a need to justify the massive investments they have already made in AI.

The NVIDIA chief predicts that the future of the economy is one in which human workers will act as the executives of teams of agents.

🧪 RESEARCH

Source: arXiv

The authors of this paper introduce Prompt Infection, a malicious prompt which spreads among interconnected agents like a computer virus, as well as a defense mechanism designed to guard against its spread.

Thanks for reading! Until next time, keep learning and building!
