How to pick the right model for your AI agents

Plus: A video tutorial on building a basic agent with Google’s new model, using agents to run businesses, and more

Edition 139 | November 24, 2025

Wrong, the best model for any use case is “one that isn’t 6 months out of date by the time your company’s lawyers approve it for use.”

Welcome back to Building AI Agents, your biweekly guide to everything new in the field of agentic AI!

🦃 In observance of Thanksgiving, there will be no issue of Building AI Agents this Thursday. Eating turkey and stuffing is a job I’m not willing to hand off to the robots.

In today’s issue…

How to choose the right LLM

Not everyone wants an agentic Windows

Building a basic agent with Google’s new model

15,000 MCP servers for every use case

Using agents to run businesses

…and more

🔍 SPOTLIGHT

If there’s one aspect of agent design that causes builders the most unnecessary stress, it’s model selection.

On some level, this is understandable: so much of the discourse in AI revolves around who has the hottest new LLM cleaning up on the benchmarks. In my experience, though, model selection is actually the least critical phase of agent design.

That doesn’t mean it isn’t important to get right.

The first thing you should do, before even reviewing the latest model offerings, is to get a clear idea of what your agent will actually be doing and to come up with a standard evaluation you can use to compare models. For example, if your agent’s job is to review all of your emails and meeting notes from a prospective client and draft a statement of work (SOW), find, say, 5-10 SOWs you’ve previously prepared for clients, along with all of the documents you used to draft each one. Include enough test cases to get a good sense of how the agent performs across a range of scenarios, but not so many that reviewing its work manually becomes a pain.

For each example, come up with a rubric of things that should and shouldn’t be included in the correct answer; or, if it’s a simpler problem like “extract the vendor name from the email”, just write down the correct value you want it to find. You now have the answer key to the “exam” you’ll be giving to your agent.
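To make the “answer key” concrete, here is a minimal sketch of how you might store test cases and score outputs, assuming plain Python data. The `EvalCase` class, its fields, and the `grade` function are hypothetical illustrations, not part of any framework, and the substring check is deliberately naive; for rubric items like “covers the deliverables,” you would likely still review outputs by hand.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EvalCase:
    """One question on the agent's "exam" (hypothetical structure)."""

    name: str
    inputs: list[str]  # e.g. the emails and meeting notes behind one SOW
    expected: str | None = None  # exact answer, for simple extraction tasks
    must_include: list[str] = field(default_factory=list)  # rubric: required items
    must_not_include: list[str] = field(default_factory=list)  # rubric: forbidden items


def grade(case: EvalCase, output: str) -> float:
    """Score one agent output between 0.0 and 1.0.

    Exact-answer cases are all-or-nothing. Rubric cases score the fraction of
    rubric items satisfied, using a naive case-insensitive substring check.
    """
    if case.expected is not None:
        return 1.0 if output.strip().lower() == case.expected.strip().lower() else 0.0
    text = output.lower()
    checks = [item.lower() in text for item in case.must_include]
    checks += [item.lower() not in text for item in case.must_not_include]
    return sum(checks) / len(checks) if checks else 0.0


# Hypothetical examples of both kinds of test case.
vendor_case = EvalCase("vendor-name", ["Invoice from Acme Corp ..."], expected="Acme Corp")
sow_case = EvalCase(
    "sow-basic",
    ["kickoff-notes.txt", "pricing-thread.eml"],
    must_include=["deliverables", "timeline"],
    must_not_include=["hourly rate"],
)

print(grade(vendor_case, " acme corp "))  # 1.0
print(grade(sow_case, "Timeline: 6 weeks. Hourly rate: $150."))  # 0.3333333333333333 (1 of 3 checks passed)
```

Keeping exact-answer and rubric cases in one structure lets a single loop run the whole exam against any model.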

Now you can actually begin testing different models. Keep in mind that the field is moving so quickly that any specific advice about which model is the “smartest” will soon become useless. As of last Tuesday, that crown probably belongs to Google’s Gemini 3 Pro, but that may not be true in a few weeks, or even days. And just because a model does well on (easily gamed) benchmarks doesn’t mean it will work well for your use case.

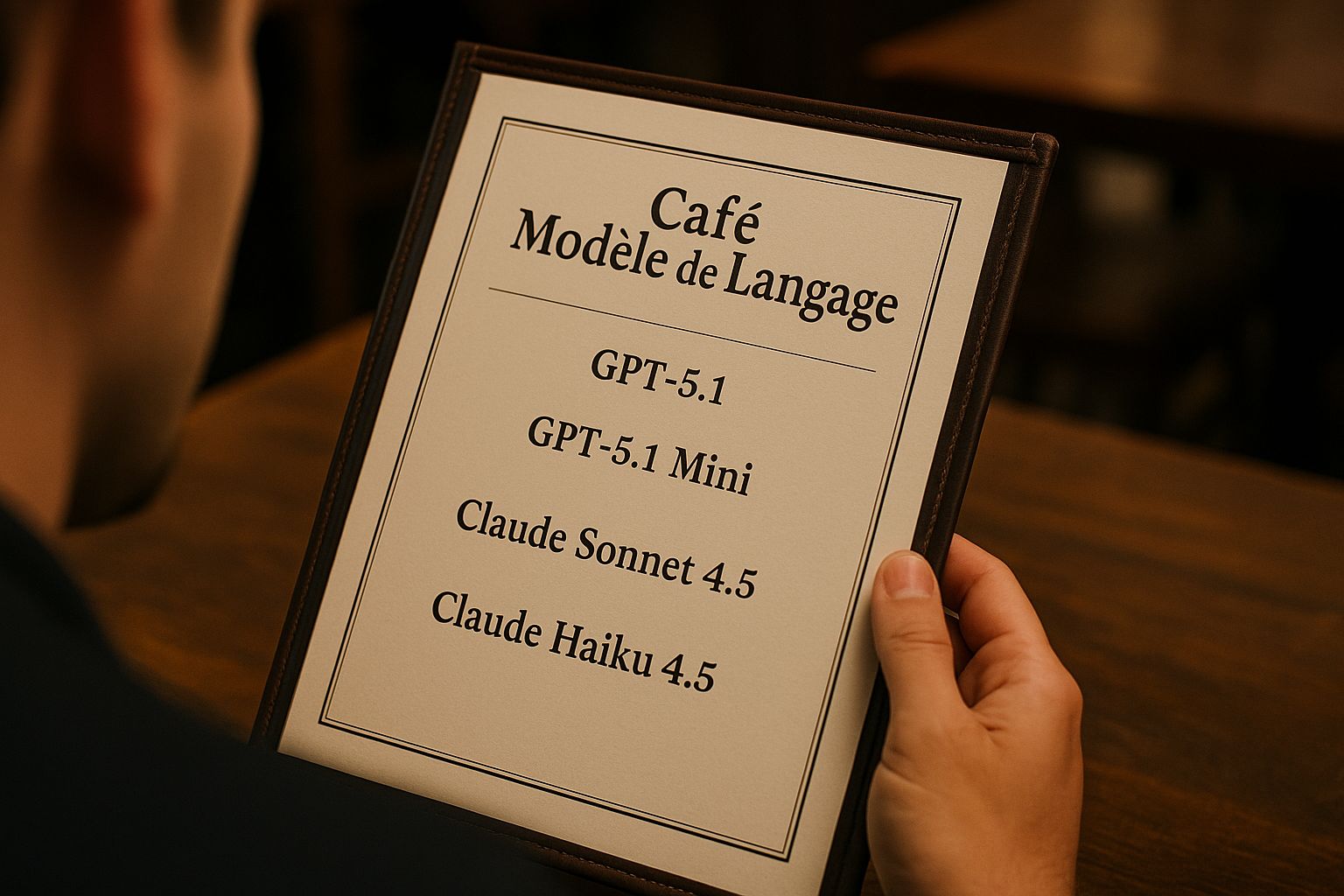

Start with the Big Three providers: OpenAI, Google, and Anthropic. If you want to widen your search, Mistral, xAI, Meta (through the open-source Llama series), Alibaba (through Qwen), and DeepSeek are the major second-tier options, but 99% of the time, the Big Three will meet your needs.

Test one model from each company, starting with its higher-tier offering (for example, OpenAI’s GPT-5.1 rather than GPT-5.1 Mini), even if it feels expensive. There’s a reason for this: if your agent can’t do its assigned job with a smart model, then you’re asking too much of it and need to go back to the drawing board. Start smart and expensive, then see whether a dumber, cheaper model can still do the job.
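The “start smart, then step down” approach can be sketched as a walk down one provider’s price ladder. This is a minimal illustration, assuming you have some `run_eval(model)` function (hypothetical) that runs your full evaluation and returns an average score. The model names and scores in the usage lines are placeholders, not real results.

```python
from __future__ import annotations

from collections.abc import Callable


def cheapest_passing_model(
    models_by_cost: list[str],
    run_eval: Callable[[str], float],
    threshold: float,
) -> str | None:
    """Walk down the price ladder, starting from the most capable model.

    `models_by_cost` is ordered from most to least expensive. Returns the
    cheapest model whose eval score meets `threshold`, or None if even the
    top model falls short.
    """
    chosen = None
    for model in models_by_cost:
        if run_eval(model) >= threshold:
            chosen = model  # this tier still does the job; try the next cheaper one
        else:
            break  # assume cheaper tiers won't do better, so stop here
    return chosen


# Usage with a stubbed evaluation (placeholder model names and scores).
stub_scores = {"big-model": 0.92, "mid-model": 0.88, "small-model": 0.61}
print(cheapest_passing_model(list(stub_scores), stub_scores.__getitem__, 0.85))  # mid-model
print(cheapest_passing_model(list(stub_scores), stub_scores.__getitem__, 0.95))  # None
```

A `None` result is the “back to the drawing board” signal: if the top tier can’t pass, a cheaper one is unlikely to. The early `break` assumes quality falls with price; drop it if you want to check every tier anyway.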

Run each model through a couple of rounds of your evaluation, tweaking its prompt each time to address the common mistakes you see it making. Sometimes one model will clearly emerge as better at understanding the task than the others. Often, though, each will have its own quirks and none will be the obvious winner. In my experience, the specific model you use matters much less to the agent’s success than how well you prompt it and what tools and context you give it.
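When no model is the obvious winner, it helps to see exactly which cases each one fails in a round, so you know which mistakes to target with the next prompt tweak. A minimal sketch, assuming a hypothetical `score(model, case_name)` hook that runs the agent on one case and grades the output; the stubbed data below is a placeholder.

```python
from __future__ import annotations

from collections.abc import Callable, Iterable


def failure_report(
    models: Iterable[str],
    case_names: list[str],
    score: Callable[[str, str], float],
    pass_mark: float = 1.0,
) -> dict[str, list[str]]:
    """Map each model to the eval cases it failed in one round.

    `score(model, case_name)` is a hypothetical hook that runs the agent with
    `model` on one case and grades the output between 0.0 and 1.0.
    """
    return {
        model: [name for name in case_names if score(model, name) < pass_mark]
        for model in models
    }


# Stubbed round: each model stumbles on a different case (placeholder data).
stub = {
    ("model-a", "sow-basic"): 1.0, ("model-a", "sow-multi-vendor"): 0.5,
    ("model-b", "sow-basic"): 0.7, ("model-b", "sow-multi-vendor"): 1.0,
}
report = failure_report(["model-a", "model-b"], ["sow-basic", "sow-multi-vendor"],
                        lambda m, c: stub[(m, c)])
print(report)  # {'model-a': ['sow-multi-vendor'], 'model-b': ['sow-basic']}
```

Rerunning the report after each prompt change shows whether a tweak fixed a model’s quirk or just moved the failure to another case.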

As the Big Three and other providers roll out new models, keep testing them against your evaluation and adopt them if they offer a clear advantage. Your evaluation, in turn, should keep evolving as you add problems your agent has failed to solve in actual use; you may find that a new model is up to the challenge.
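The ongoing loop of growing the eval set and only switching for a clear win can be sketched with two small helpers. Both are hypothetical: the case dictionaries assume a `"name"` key, and the 0.05 margin is an arbitrary illustrative threshold, not a recommendation. Score the incumbent and the candidate on the same, updated eval set before comparing.

```python
def add_regression_case(eval_set: list[dict], case: dict) -> bool:
    """Add a problem the agent failed on in real use; skip duplicate names.

    Returns True if the case was added.
    """
    if any(existing["name"] == case["name"] for existing in eval_set):
        return False
    eval_set.append(case)
    return True


def should_adopt(current_score: float, candidate_score: float, margin: float = 0.05) -> bool:
    """Switch to a new model only if it beats the incumbent by a clear margin.

    The 0.05 default is an arbitrary illustrative threshold.
    """
    return candidate_score >= current_score + margin


# Usage with placeholder cases and scores.
eval_set = [{"name": "sow-basic"}]
print(add_regression_case(eval_set, {"name": "sow-rush-timeline"}))  # True
print(add_regression_case(eval_set, {"name": "sow-basic"}))  # False
print(should_adopt(0.80, 0.82))  # False
print(should_adopt(0.80, 0.93))  # True
```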

Ultimately, if there’s one thing you should take away when it comes to model selection, it’s this: don’t stress too much about which one to choose, but make sure that you give each a fair shot.

Always keep learning and building!

—Michael